Simultaneous Prediction of Valence / Arousal and Emotion Categories and its Application in an HRC Scenario

In this project, we first evaluate approaches for the automatic prediction of basic emotions and valence/arousal values for a circumplex model on several comprehensive in-the-wild databases.

Thereafter, we apply the most promising approach in a Human-Robot Cooperation (HRC) scenario, with the aim of detecting even slight statistical variations in expressed fear or anger across different working situations.

Deep-learning-based analysis of facial expressions using current comprehensive in-the-wild databases

We address the problem of emotional state detection from facial expressions. Our proposed approach simultaneously detects faces and predicts both discrete emotion categories and continuous valence/arousal values from raw input images. We train and evaluate our approach on three different datasets, compare it to other state-of-the-art approaches, and perform a cross-database evaluation. We found that our approach generalizes well and is suitable for real-time applications.

|

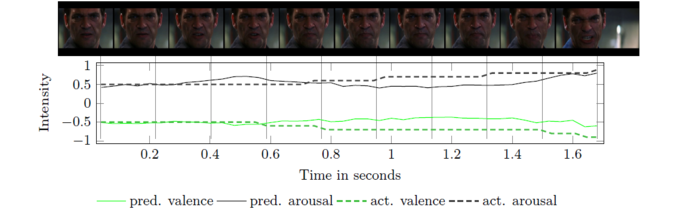

| Predictions and actual values for valence and arousal over time for a video samples of the AFEW-VA database. |

|

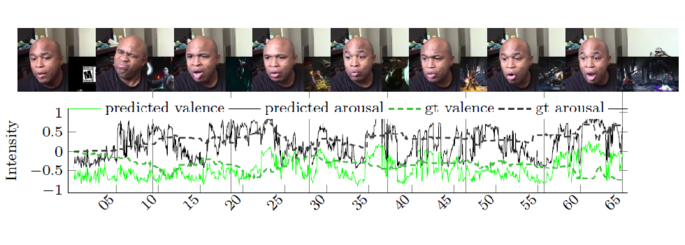

| Predictions and actual values for valence and arousal over time for a video sample of the Aff-Wild database. |

Human-Robot Cooperation Scenario

At Fraunhofer IWU Chemnitz, a Human-Robot Cooperation (HRC) scenario with a heavy industrial robot arm has been set up to investigate how fear or frustration arises and how it can be minimized using diverse feedback systems. To this end, three scenarios with additional difficulties (S1-S3) and one with a normal procedure (S4) were designed.

Each cycle of the procedure consists of the same phases:

- First, the robot arm fetches a front axle beam from a cupboard

- Then it moves the beam into the shared workspace

- The human worker enters the shared workspace

- The human worker uses gesture control to adjust the height of the front axle beam and then attaches colored buttons at predefined positions within a limited time

- The robot transports the front axle beam to another cupboard (cycle complete)

The task is repeated four times in each scenario, giving four cycles. The following errors occur in cycles 1 and 3:

- S1: Gesture control is inverted (restart of robot required)

- S2: Gesture control is inverted and cycle time is reduced by 50%

- S3: Cycle time is reduced by 50%

- S4: No error (normal procedure)
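The scenario design above can be written down as a small lookup table. The following is only an illustrative sketch: the names `Scenario`, `SCENARIOS`, and `conditions` are hypothetical and do not come from the project's software, and expressing the 50% reduction as a multiplicative factor of 0.5 is an assumption about how the cycle time would be represented.

```python
from dataclasses import dataclass

N_CYCLES = 4           # each scenario repeats the task four times
ERROR_CYCLES = (1, 3)  # errors are injected in cycles 1 and 3 only


@dataclass(frozen=True)
class Scenario:
    """Hypothetical description of one scenario (names are assumptions)."""
    name: str
    invert_gesture_control: bool  # gesture control inverted in error cycles
    cycle_time_factor: float      # 1.0 = normal cycle time, 0.5 = reduced by 50%


SCENARIOS = {
    "S1": Scenario("S1", invert_gesture_control=True, cycle_time_factor=1.0),
    "S2": Scenario("S2", invert_gesture_control=True, cycle_time_factor=0.5),
    "S3": Scenario("S3", invert_gesture_control=False, cycle_time_factor=0.5),
    "S4": Scenario("S4", invert_gesture_control=False, cycle_time_factor=1.0),
}


def conditions(scenario_name, cycle):
    """Return (gesture control inverted?, cycle time factor) for one cycle."""
    if not 1 <= cycle <= N_CYCLES:
        raise ValueError(f"cycle must be between 1 and {N_CYCLES}")
    scenario = SCENARIOS[scenario_name]
    if cycle in ERROR_CYCLES:
        return scenario.invert_gesture_control, scenario.cycle_time_factor
    # Cycles 2 and 4 run without injected errors in every scenario.
    return False, 1.0
```

For example, `conditions("S2", 1)` describes an error cycle with both difficulties active, while `conditions("S2", 2)` describes a normal cycle.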

We first run all experiments with subjects of the NF group, who receive no feedback: they are not told the reason for the gesture control error or that the robot has to restart, and they receive no information about the reduced cycle time. Subjects of the F group perform the same experiments, but a screen and colored LED lights on the robot inform them about gesture control errors and reduced cycle times.

We found that

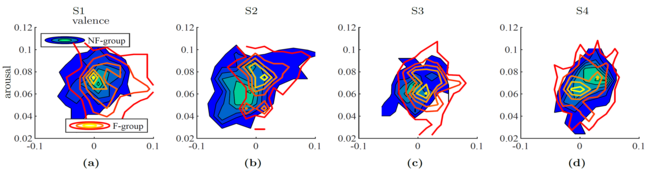

- The median valence of the NF-group subjects decreases for the scenarios with additional difficulties (S1-S3), compared to the normal scenario (S4)

- This effect could be normalized for F-group by the used feedback

Distribution of valence / arousal values in scenarios S1-S4 for the F and NF group. If no errors occur (S4), both distributions are almost congruent. If an error occurs, the subjects in the NF group show a slightly more negative valence (S1+S3). The effect is largest when two errors occur in parallel (S2).
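The comparison of median valence between groups and scenarios can be sketched as follows. This is a minimal illustration, not the evaluation code used in the project: the function names and the input layout (one `(group, scenario, valence)` tuple per predicted frame) are assumptions, and no measured values are included.

```python
from collections import defaultdict
from statistics import median


def median_valence(samples):
    """Return the median valence for every (group, scenario) pair.

    `samples` is an iterable of (group, scenario, valence) tuples, where
    group is "F" or "NF" and scenario is one of "S1" to "S4".
    """
    grouped = defaultdict(list)
    for group, scenario, valence in samples:
        grouped[(group, scenario)].append(valence)
    return {key: median(values) for key, values in grouped.items()}


def valence_shift(medians, group, scenario, baseline="S4"):
    """Median valence of `scenario` minus that of the baseline scenario.

    A negative result means the group showed lower median valence in the
    scenario than in the normal procedure.
    """
    return medians[(group, scenario)] - medians[(group, baseline)]
```

Calling `valence_shift(medians, "NF", "S2")` and `valence_shift(medians, "F", "S2")` on the same set of predictions would put the two groups side by side for the scenario with two simultaneous errors.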

Example of real-time detection of valence/arousal on the Aff-Wild database.

Video of the environment of the HRC scenario at Fraunhofer IWU Chemnitz.