The BioVid Heat Pain Database

Updates

- Aug 26, 2022: updated instructions on how to get access to the data

- Feb 19, 2018: added instructions on how to get the BioVid Emo DB, and added [13]

- Jan 18, 2018: added [12] and updated evaluation section

Motivation

The assessment of acute pain is one of the basic tasks in clinical practice. To this day, the common practice is to rely on the patient's self-report. For patients with mental impairments, self-report is of limited reliability and validity. For patients with reduced vigilance and for newborns, it cannot be used at all. However, there are several characteristics that indicate pain. These include specific changes in facial expression and in psychobiological parameters such as heart rate, skin conductance, or the electrical activity of skeletal muscles. Such information can be used to assess pain without relying on the patient's self-report.

Overview on the Database

To advance methods for pain assessment, in particular automatic assessment methods, the BioVid Heat Pain Database was collected in a collaboration between the Neuro-Information Technology group of the University of Magdeburg and the Medical Psychology group of the University of Ulm. In our study, 90 participants were subjected to experimentally induced heat pain at four intensities. To compensate for varying heat pain sensitivity, the stimulation temperatures were adjusted based on each subject's pain threshold and pain tolerance. Each of the four pain levels was applied 20 times in randomized order. For each stimulus, the maximum temperature was held for 4 seconds. The pauses between stimuli were randomized between 8 and 12 seconds. The pain stimulation experiment was conducted twice: once with an unoccluded face and once with facial EMG sensors attached.
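The stimulation protocol described above (four levels, 20 repetitions each in randomized order, a 4-second plateau, 8-12 second pauses) can be sketched as a schedule generator. This is a minimal illustration, not the software used in the study. Two points are assumptions not stated on this page: the four temperatures are placed evenly between the subject's pain threshold (level 1) and pain tolerance (level 4), and pauses are drawn uniformly while ramp times are ignored. The threshold and tolerance values in the usage line are hypothetical.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass
class Stimulus:
    level: int           # pain level 1..4
    temperature: float   # plateau temperature in degrees Celsius
    onset: float         # plateau start, seconds from the start of the session
    pause_after: float   # randomized pause after the plateau, in seconds


def stimulation_temperatures(threshold: float, tolerance: float, levels: int = 4) -> list[float]:
    """ASSUMPTION: levels are spaced evenly from the pain threshold (level 1)
    to the pain tolerance (highest level)."""
    step = (tolerance - threshold) / (levels - 1)
    return [threshold + i * step for i in range(levels)]


def make_schedule(threshold: float, tolerance: float, reps: int = 20,
                  plateau: float = 4.0, pause_range: tuple[float, float] = (8.0, 12.0),
                  seed: int | None = None) -> list[Stimulus]:
    rng = random.Random(seed)
    temps = stimulation_temperatures(threshold, tolerance)
    # Each level appears `reps` times; shuffling gives a randomized order.
    levels = [lvl for lvl in range(1, len(temps) + 1) for _ in range(reps)]
    rng.shuffle(levels)
    schedule, t = [], 0.0
    for lvl in levels:
        pause = rng.uniform(*pause_range)  # ASSUMPTION: uniform within 8-12 s
        schedule.append(Stimulus(lvl, temps[lvl - 1], t, pause))
        # ASSUMPTION: ramp-up/ramp-down time of the thermode is ignored.
        t += plateau + pause
    return schedule


if __name__ == "__main__":
    # Hypothetical threshold/tolerance values for one subject.
    for s in make_schedule(41.0, 49.0, seed=0)[:3]:
        print(s)
```

Passing a fixed `seed` makes a schedule reproducible, which is convenient when comparing analysis code against a known stimulus order.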

The participants also posed basic emotions and pain. Furthermore, basic emotions were elicited with video clips.

You can find more details on the study and the dataset in [1], [2], and [13].

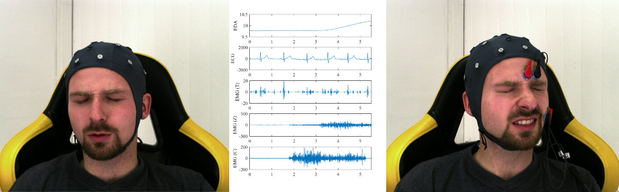

Example of the measurement setup during an induced pain stimulus.

Distribution of the Data

The database is available for non-commercial research only. The Medical Psychology group of Ulm University is responsible for authorizing access to the data. To receive Parts A-D, you or your advisor (a permanent position at an academic institution is required; students have to ask their professor) must fill in and sign the agreement form and send it to . For Part E (emotion elicitation), which is also called BioVid Emo DB, sign this agreement form and send it to .

Data Organization

The database consists of the following parts:

- Part A: Pain stimulation without facial EMG (short time windows)

- Part B: Pain stimulation with facial EMG (partially occluded face, short time windows)

  - frontal video, biomedical signals (GSR, ECG, EMG at trapezius, corrugator and zygomaticus muscles) as raw and preprocessed signals

  - 8,600 samples; 86 subjects (84 of them also occur in Part A), 5 classes (no pain and the four pain intensities), 20 samples per class and subject; time windows of 5.5 seconds

  - used in [4], [5], [7], [8] to classify pain intensity

- Part C: Pain stimulation without facial EMG (long videos)

- Part D: Posed pain and basic emotions with a case vignette

  - frontal video, biomedical signals (GSR, ECG, and EMG at trapezius muscle) as raw data

  - 630 sequences (each 1 min), 90 subjects, 7 categories (happiness, sadness, anger, disgust, fear, pain, and neutral)

- Part E: Emotion elicitation with video clips

  - also called BioVid Emo DB

  - frontal video, biomedical signals (GSR, ECG, and EMG at trapezius muscle) as raw and preprocessed data
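The sample counts listed for Parts B and D are internally consistent: 86 subjects × 5 classes × 20 samples = 8,600 for Part B, and 90 subjects × 7 categories = 630 for Part D (one 1-minute sequence per category and subject). A minimal sketch that encodes these numbers to sanity-check a local copy follows. The `PartSpec` class and `check_counts` function are hypothetical helpers, and how you count items per subject depends on your own loader.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PartSpec:
    name: str
    subjects: int
    categories: int
    items_per_category_and_subject: int

    @property
    def items_per_subject(self) -> int:
        return self.categories * self.items_per_category_and_subject

    @property
    def expected_items(self) -> int:
        return self.subjects * self.items_per_subject


# Numbers taken from the Data Organization list.
PART_B = PartSpec("B", subjects=86, categories=5, items_per_category_and_subject=20)
PART_D = PartSpec("D", subjects=90, categories=7, items_per_category_and_subject=1)


def check_counts(spec: PartSpec, counts: dict[str, int]) -> list[str]:
    """`counts` maps subject ID -> number of items found locally (how you
    count them depends on your own, hypothetical loader)."""
    problems = []
    if len(counts) != spec.subjects:
        problems.append(f"Part {spec.name}: {len(counts)} subjects, expected {spec.subjects}")
    for subject, n in sorted(counts.items()):
        if n != spec.items_per_subject:
            problems.append(
                f"Part {spec.name}: {subject} has {n} items, expected {spec.items_per_subject}")
    return problems


if __name__ == "__main__":
    print(PART_B.expected_items, PART_D.expected_items)  # 8600 630
```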

More parts may be added in the future. If you publish experimental results, please state which part(s) you used.

Please note that EEG is not available.

Evaluation Procedure for Recognition Systems

To improve the comparability of recognition performance, we suggest the following experimental procedure. Performance should be estimated with leave-one-subject-out cross-validation using all available subjects and samples; results obtained on subsets are, in general, not comparable. Model, feature, and parameter selection should be done within each fold of the cross-validation; if you use the whole database for tuning before cross-validation, the performance estimate will be biased. A subject-specific feature space transform (like the subject-specific standardization in [3]) is allowed to compensate for differences in expressiveness, but it should be explained in your paper. The transform may use all samples, but (of course) no labels. Please name the part of the database that you used.
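The recommended procedure can be illustrated with a dependency-free sketch: a label-free, per-subject z-score transform, followed by leave-one-subject-out evaluation in which the only hyperparameter is chosen by an inner leave-one-subject-out loop over the training subjects of each outer fold. The k-nearest-neighbour classifier and the candidate values of k are placeholders chosen for brevity; this is not the method of [3] or any other cited paper, and z-scoring is only one example of a transform that the rules allow.

```python
from __future__ import annotations

import math
from collections import Counter, defaultdict


def standardize_per_subject(X: list[list[float]], subjects: list[str]) -> list[list[float]]:
    """Z-score every feature using only the samples of the same subject.
    Labels are not used, so the held-out subject's samples may be included."""
    groups = defaultdict(list)
    for i, s in enumerate(subjects):
        groups[s].append(i)
    Z = [list(row) for row in X]
    for idx in groups.values():
        for j in range(len(X[0])):
            vals = [X[i][j] for i in idx]
            mean = sum(vals) / len(vals)
            std = math.sqrt(sum((v - mean) ** 2 for v in vals) / len(vals)) or 1.0
            for i in idx:
                Z[i][j] = (X[i][j] - mean) / std
    return Z


def loso_splits(subjects: list[str]):
    """Yield (held_out_subject, train_indices, test_indices)."""
    for s in sorted(set(subjects)):
        test = [i for i, t in enumerate(subjects) if t == s]
        train = [i for i, t in enumerate(subjects) if t != s]
        yield s, train, test


def knn_predict(train_X, train_y, x, k):
    nearest = sorted(range(len(train_X)), key=lambda i: math.dist(x, train_X[i]))[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]


def accuracy(X, y, train, test, k):
    tx, ty = [X[i] for i in train], [y[i] for i in train]
    return sum(knn_predict(tx, ty, X[i], k) == y[i] for i in test) / len(test)


def select_k(X, y, subjects, train, candidates):
    """Inner LOSO restricted to the training subjects of the current outer fold."""
    inner_subjects = [subjects[i] for i in train]
    best_k, best_acc = candidates[0], -1.0
    for k in candidates:
        # Inner indices refer to positions in `train`; map them back to global indices.
        accs = [accuracy(X, y, [train[i] for i in tr], [train[i] for i in te], k)
                for _, tr, te in loso_splits(inner_subjects)]
        if sum(accs) / len(accs) > best_acc:
            best_k, best_acc = k, sum(accs) / len(accs)
    return best_k


def evaluate_loso(X, y, subjects, candidates=(1, 3, 5)):
    """Outer LOSO; k is re-selected in every fold, never on the held-out subject."""
    results = {}
    for s, train, test in loso_splits(subjects):
        k = select_k(X, y, subjects, train, list(candidates))
        results[s] = accuracy(X, y, train, test, k)
    return results
```

The nested loop costs roughly (number of subjects)² model fits, which is why selection is often simplified in practice. With larger libraries, scikit-learn's `LeaveOneGroupOut` splitter (with subject IDs as groups) serves the same role as `loso_splits` here.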

In [12] we propose to use a subject subset that excludes those study participants who did not react visibly to the applied pain stimuli. The 20 excluded subjects are: 082315_w_60, 082414_m_64, 082909_m_47, 083009_w_42, 083013_w_47, 083109_m_60, 083114_w_55, 091914_m_46, 092009_m_54, 092014_m_56, 092509_w_51, 092714_m_64, 100514_w_51, 100914_m_39, 101114_w_37, 101209_w_61, 101809_m_59, 101916_m_40, 111313_m_64, 120614_w_61. A leave-one-subject-out cross-validation should be conducted with the remaining 67 subjects (not listed here). If you use this subject subset, please cite [12]. For experiments using Part A, you can compare your results with ME, row 5 of Table 2 in [12].
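The exclusion rule from [12] can be applied mechanically before running leave-one-subject-out evaluation. A minimal sketch, assuming your local data is keyed by subject IDs written exactly as in the list above; the names `EXCLUDED_IN_12` and `subset_of_12` are hypothetical, and the optional `expected` check simply guards against a mismatch with the stated 67 remaining subjects.

```python
from __future__ import annotations

# The 20 subjects excluded in [12], copied from the list above.
EXCLUDED_IN_12 = frozenset({
    "082315_w_60", "082414_m_64", "082909_m_47", "083009_w_42", "083013_w_47",
    "083109_m_60", "083114_w_55", "091914_m_46", "092009_m_54", "092014_m_56",
    "092509_w_51", "092714_m_64", "100514_w_51", "100914_m_39", "101114_w_37",
    "101209_w_61", "101809_m_59", "101916_m_40", "111313_m_64", "120614_w_61",
})


def subset_of_12(subject_ids: list[str], expected: int | None = None) -> list[str]:
    """Drop the excluded subjects, keeping the input order. If `expected`
    is given (e.g. 67, as stated above), raise when the remaining count differs."""
    kept = [s for s in subject_ids if s not in EXCLUDED_IN_12]
    if expected is not None and len(kept) != expected:
        raise ValueError(f"{len(kept)} subjects remain, expected {expected}")
    return kept
```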

For deep learning, a less computationally expensive alternative evaluation method has been proposed by Stefanos Gkikas, who suggests using the data split detailed in this PDF file.

If you deviate from these recommendations, you should explain your reasons and state that comparability with other results is limited. If you use subject subsets, please define them precisely (e.g., in supplementary material). We are open to suggestions for additional or alternative recommendations (including subject subsets); with good reasons, you may convince us to post them here.

Related Publications

- [1] Philipp Werner, Ayoub Al-Hamadi, Robert Niese, Steffen Walter, Sascha Gruss, Harald C. Traue. Towards Pain Monitoring: Facial Expression, Head Pose, a new Database, an Automatic System and Remaining Challenges. Proceedings of the British Machine Vision Conference, BMVA Press, 2013.

- [2] Steffen Walter, Philipp Werner, Sascha Gruss, Harald C. Traue, Ayoub Al-Hamadi, et al. The BioVid Heat Pain Database: Data for the Advancement and Systematic Validation of an Automated Pain Recognition System. Proceedings of the IEEE International Conference on Cybernetics, 2013.

- [3] Philipp Werner, Ayoub Al-Hamadi, Robert Niese, Steffen Walter, Sascha Gruss, Harald C. Traue. Automatic Pain Recognition from Video and Biomedical Signals. Proceedings of the International Conference on Pattern Recognition (ICPR), pp. 4582-4587, 2014.

- [4] Markus Kächele, Philipp Werner, Steffen Walter, Ayoub Al-Hamadi, Friedhelm Schwenker. Multimodal Fusion of Bio-Visual Signals for Person-Independent Recognition of Pain Intensity. Proceedings of the 2nd Workshop on Machine Learning for Clinical Data Analysis, Healthcare and Genomics (NIPS 2014), in press.

- [5] M. Kächele, Philipp Werner, A. Al-Hamadi, G. Palm, S. Walter, F. Schwenker. Bio-Visual Fusion for Person-Independent Recognition of Pain Intensity. Multiple Classifier Systems (MCS), 2015.

- [6] Markus Kächele, Patrick Thiam, Mohammadreza Amirian, Philipp Werner, Steffen Walter, Friedhelm Schwenker, Guenther Palm. Multimodal data fusion for person-independent, continuous estimation of pain intensity. Engineering Applications of Neural Networks (EANN), 2015.

- [7] S. Walter, S. Gruss, K. Limbrecht-Ecklundt, H. C. Traue, Philipp Werner, A. Al-Hamadi, A. O. Andrade, N. Diniz, G. M. da Silva. Automatic pain quantification using autonomic parameters. Psychology and Neuroscience, 11/2014.

- [8] Sascha Gruss, Roi Treister, Philipp Werner, Harald C. Traue, Stephen Crawcour, Adriano Andrade, Steffen Walter. Pain Intensity Recognition Rates via Biopotential Feature Patterns with Support Vector Machines. PLoS ONE 10(10), 2015.

- [9] Philipp Werner, A. Al-Hamadi, K. Limbrecht-Ecklundt, S. Walter, S. Gruss, H. C. Traue. Automatic Pain Assessment with Facial Activity Descriptors. IEEE Transactions on Affective Computing PP(99), 2016. Impact Factor (2015): 1.873.

- [10] Philipp Werner, Ayoub Al-Hamadi, Steffen Walter, Sascha Gruss, Harald C. Traue. Automatic Heart Rate Estimation from Painful Faces. International Conference on Image Processing (ICIP), pp. 1947-1951, 2014.

- [11] Michal Rapczynski, Philipp Werner, Ayoub Al-Hamadi. Continuous Low Latency Heart Rate Estimation from Painful Faces in Real Time. International Conference on Pattern Recognition (ICPR), 2016.

- [12] Philipp Werner, Ayoub Al-Hamadi, Steffen Walter. Analysis of Facial Expressiveness During Experimentally Induced Heat Pain. International Conference on Affective Computing and Intelligent Interaction Workshops and Demos (ACIIW), 2017.

- [13] Lin Zhang, Steffen Walter, Xueyao Ma, Philipp Werner, Ayoub Al-Hamadi, Harald C. Traue, Sascha Gruss. "BioVid Emo DB": A Multimodal Database for Emotion Analyses validated by Subjective Ratings. Proceedings of the IEEE Symposium Series on Computational Intelligence, 2016.

- [14] Philipp Werner, Daniel Lopez-Martinez, Steffen Walter, Ayoub Al-Hamadi, Sascha Gruss, Rosalind Picard. Automatic Recognition Methods Supporting Pain Assessment: A Survey. IEEE Transactions on Affective Computing, 2019, DOI: 10.1109/TAFFC.2019.2946774. Impact Factor (2018): 6.288.

Contact

Steffen Walter, Ayoub Al-Hamadi, Harald C. Traue, Philipp Werner

Acknowledgement

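The data collection was funded by the Deutsche Forschungsgemeinschaft (DFG), project "Weiterentwicklung und systematische Validierung eines Systems zur automatisierten Schmerzerkennung auf der Grundlage von mimischen und psychobiologischen Parametern" (further development and systematic validation of a system for automated pain recognition based on facial-expression and psychobiological parameters).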